Finding the most important pieces of information in a mass of data has always been a key task in any job. In network science the tools used to do this are known as “centrality measures“. My most recent paper (with Bingsheng Chen) looks at the relationship between two of the oldest and most important examples, degree and closeness.

Networks (for example see Coscia 2021) are a powerful way to organise the large data sets faced by modern researchers in many fields, from physics through biology to social sciences. In their simplest form, the objects of interest are represented by nodes of a network. So a node might represdent proteins in biology or people in social science. The edges of a network then represent relationships between pairs of nodes.

One of the most common questions we ask about our data is what are the most important pieces of information? In a network representation, this corresponds to finding the most important nodes. Not surprisingly, this was one of the earliest problems tackled in network science where the importance of nodes is measured by centrality measures mentioned above. These have been developed in social network analysis since the 1950’s. The name centrality measure comes from an analogy with a city where most important institutions are found at the geographical centre of a city to enable the easiest access for the largest number of people. The arrival of large electronic datasets prompted a flurry of new research on centrality measures. For instance, in 1998 the founders of Google developed their own centrality measure, PageRank (Brin & Page 1998). This uses a simple diffusion process on a network of webpages (nodes) connected by hyperlinks (edges) with the idea that relevant pages in a search can be placed in order of their importance. The web page with the highest PageRank going top of the list returned by Google.

Finding the most important nodes is such a critical job that this problem has been studied many times and this has produced hundreds (if not thousands) of centrality measures. These encode different interpretations of what is the “importance” of a node and perhaps the context of the data and of the application may point a researcher to one definition over another. Nevertheless, one is left with the feeling that there are so many centrality measures that many may well be producing similar information and therefore there is a large redundancy in the centrality measures in the literature. For instance, David Schoch produced a periodic table of centrality measures, a nice visualisation of a large number of centrality measures placing similar ones close together imitating the periodic table of elements. To be more quantitative, one can measure the correlations between different values for centrality measures for the same node. What one finds from these studies is that there are clear correlations and yet no clear pattern has emerged. As Valente et al. (2008) put it, “The amount of correlation between degree, betweenness, closeness, and eigenvector indicates that these measures are distinct, yet conceptually related”. This leaves researchers uncertain what to do when choosing a centrality measure for their problem.

Our Work

In my work with Bingsheng Chen (Evans & Chen 2022) we looked at two of the oldest and most popular centrality measures: degree and closeness (Bavelas, 1950) defined closeness. The degree kv of a node v is simply the number of nearest neighbours, the number of nodes connected by a single edge to the node v. Closeness is defined in terms of the length of the shortest path between two nodes. A path in a network is just a sequence of nodes where each node is connected by an edge to the previous node in the sequence. The length is the number of edges traversed as you move along the path. You can then calculate the average distance from a node v to all other nodes. The smaller this average value of distance is, the closer node v is to the other nodes in a network. So a low value of this average indicates which nodes are the most central and, using the analogy of a city mentioned above, we might define these to be most important. Centrality measures are defined so that the larger the value of a centrality measure, the more important a node is. So the centrality measure closeness cv for a node v is defined to be the inverse of the average distance to all other nodes.

What we suggested in our Communications Physics paper (Evans & Chen, 2022) is that you can do better for degree and closeness than the qualitative statement of Valente et al. (2008) that they are “conceptually related”.

In our work, we picture the shortest paths used to calculate closeness as a tree which is a network with no loops and where there is only one path between any two nodes. In our case, our shortest-path tree for node v has every node from the original network, the edges in the tree are a subset of the edges in the original network, and the path in the tree from the root node v to any other node is always a shortest path in the full network. Such trees can always be defined for each node though they are not in general unique. Shortest-path trees are easily created using standard breadth-first search algorithms.

Our key algebraic assumption is that the branches of these trees will look statistically similar for most networks. The reason for this is that the number of nodes at distance L from the root grows exponentially in most networks. This is the reason why we see the phenomena of six degrees of separation in most networks. That is, even in very large networks, the length of the shortest path from any node to any other node is usually small, typically of order log(N) (where N is the number of nodes in the network) rather than N1/D, you would expect if the network is in a D-dimensional Euclidean space (e.g. if our network was a regular cubic lattice so D=3).

Outline of Derivation

You can skip to the next section on results but I will give a brief outline of the analytical part of the work.

We used the simplest model for the branches of the tree. Namely, we assume that on average the degree of each node in the tree is z. That is one edge links every node to the parent node that is one step closer to the root node v, while the remaining (z-1) nodes are one step further away. Given that this means the number of nodes which are L steps away from the root is growing in proportion to (z-1)L i.e. exponentially fast, we have to stop the growth somewhere and we do so by demanding that the total number of nodes in the tree is N, the number of nodes in the original network Since we know that the distance in the tree from any node to the root node is along a shortest path in the full network, we can use the shortest path tree to calculate the average distance from v to all other nodes and hence its inverse, the closeness centrality measure cv for node v.

So far the degree of node v has not appeared in our calculation. However, the first step of the shortest path tree must be to the nearest neighbours. So the shortest path tree from node v starts with kv branches and after that we assume these branches are all statistically equivalent and the degree in the rest of the tree is on average z.

Analytical Result

Putting this all together gives us our central result that the inverse of closeness is a simply b + ln(kv)/ln(z-1) and we have an expression for b in terms of the number of nodes and the average degree of the tree z . In general, it is hard to determine the average degree of the tree z in all but the simplest network models so in practice, we treat z as free parameter which we fit to the data. Since we usually have large numbers of nodes, hundreds and often many many more, we lose little by treating b as a free parameter too.

The only reason we can do these calculations is that we have made some sweeping generalisations in order to reduce our networks to simple trees which represent branching processes used widely in physics and science in general. Given the simple pictured used here, it would not be unreasonable to assume that even if our relationship between degree and closeness works for some simple numerical network models, the relationship will fail badly on most real-world networks. And yet, if there is one thing our experience as physicists has taught us, it is that there are situations where we can ignore the messy microscopic details and still arrive at results that are broadly true on large scales across many different systems. The derivation of the ideal gas law using ideas from statistical physics would be a classic example. So how good is our result on real examples?

Results from Real Networks

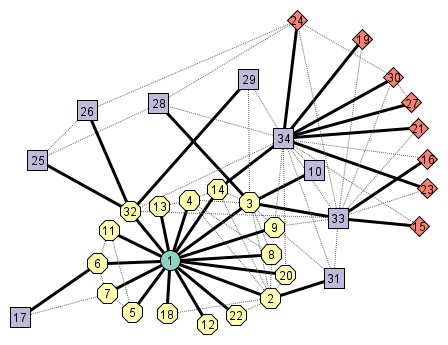

The answer turns out that our relationship works far better than we thought it would do. On three simple artificial models for networks, we get fits with reduced chi-square values which are between 1.0 and 1.3. The good fit is clear visually in the figure above. That is great but the real surprise is when we tried it on over one hundred networks based on real data. One of our referees suggested that since we had the code working for an initial set of 18 real-world networks, it would be relatively easy to look at more networks. So for the published version we used a python package graph-tool to download all the networks kept in the Netzschleude repository (both are maintained by Tiago Peixoto) and we processed all of the networks there provided no further programming effort was required. A number of these networks were too big for our code or had some technical issue that broke our code (perhaps non-ASCII characters), but we ended up looking at a further 112 networks from real-world data sets without imposing any further bias. To our surprise that we still found our relationship held well (reduced chi-square of less than 2.0) for around two-thirds of these networks, while around 80% had a reduced chi-square below 3.0. Of those that failed, a quick look at their description would tell us that they were always unlikely to work well: they were very small, or very dense (that is every node is connected to most of the other nodes) or had some other special structural feature not covered in our simple picture.

Conclusions

So what does our result do for network science? First, our relationship is a clear quantitative example of how two centrality measures are related, in this case degree and closeness, two of the oldest and best-known examples. The fact that our relationship is non-linear highlights the fact that most previous studies look at possible linear correlations between centrality measures, e.g. by using Pearson correlation coefficients. If non-linear relationships exist between centrality measures, these would not be so clear and this might explain why the previous studies have not reached any very specific conclusions. Second, our results tell researchers that, to a first approximation, we can forget closeness as most of the information is already in the degree of a node which is much quicker to calculate. However, the corollary of this is that if you do calculate closeness, you can now remove the general dependence of closeness on degree by comparing the measured value against the predicted value made using our formula. The difference from our predicted value of closeness is genuine new information in the closeness measurement that is not given by the degree value.

The final message is that the success of our method reminds us that for most networks, the exponential growth in the number of nodes as a function of the distance L from any node is the fundamental property that makes complex networks distinct from systems constrained by our three-dimensional world.

Note

This post is a revised version of one I wrote for the Nature Portfolio Physics Community entitled “Network Centrality“.

References

Bavelas, A. 1950. Communication patterns in task-oriented groups. The Journal of the Acoustical Society of America 22, 725–730.

Brin, S. & Page, L. 1998. The anatomy of a large-scale hypertextual Web search engine. Computer Networks and ISDN systems, 30, 1–7.

Coscia, M. 2021. The Atlas for the Aspiring Network Scientist. https://doi.org/10.48550/arXiv.2101.00863

Evans, T. S. & Chen, B., 2022. Linking the Network Centrality Measures Closeness and Degree. Communications Physics 5, 172, https://doi.org/10.1038/s42005-022-00949-5 .

Schoch, D. 2016. Periodic table of network centrality. URL http://schochastics.net/sna/periodic.html

Valente, T. W., Coronges, K., Lakon, C. & Costenbader, E. 2008. How correlated are network centrality measures? Connections 28, 16.